Solving the logistic regression problem in Julia

In this post, we will try to implement the gradient descent approach to solving the logistic regression problem.

Please note that this is mainly for educational purposes and the aim here is to learn. If you want to “just apply” logistic regression in Julia, please check out this one.

Let’s start with basic background.

Logistic regression is a mathematical model used to predict binary outcomes (we consider only the binary case here) based on a set of predictors. A binary outcome (an outcome with two possible values, like physiological gender, spam/not spam email, or fraud/legitimate transaction) is very often called the target variable, and predictors are also called features. The latter name is more intuitive because it is more down-to-earth, so to say: it suggests we deal with some sort of objects with known features, and we try to predict a binary feature that is unknown at the time of prediction. The main idea is to predict the target for a given set of features based on some training data, that is, a set of objects for which we know the true target.

So our task is to derive a function that will later serve as a 0/1 labeling machine: a mechanism that produces the (hopefully) correct target for a given object described by its features. The class of functions from which we choose this mechanism is called the model. Such a class is parametrized by a set of parameters, and the task of learning is, in fact, to find parameter values that let our model perform well. Performing well, in turn, is measured with yet another function called the goal function. The goal function is what we optimize when we learn the parameters of a model; it is a function of the model parameters and of training data with known labels. So the problem of machine learning can be (simplistically, of course) reduced to the problem of estimating the parameters of a given class of functions (the model) so as to minimize a given goal function.

Many different models exist with various goal functions to optimize. Today we will focus on one specific model called logistic regression. We will present some basic concepts, formalize data a little bit, and formulate a goal function that will happen to be convex and differentiable (in practice, it means the underlying optimization problem is “easy”).

As we stated before, we will need the following components in our garage.

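- Training data. It is the set of observations/objects you have and want to learn from. Each observation/object is described by a set of numbers, and each number has a meaning. We model our objects as vectors, so let’s assume the training set is a set of $n$ vectors $x_1, \dots, x_n \in \mathbb{R}^p$, where $p$ is the number of features. Stacking them as rows gives an $n \times p$ matrix $X$ with entries $x_{ij}$.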

- Set of labels. For each training example, there is a corresponding binary label. We can model all labels in one structure, again a vector, so let’s assume the target vector to be $y = (y_1, \dots, y_n) \in \{0, 1\}^n$.

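- Logistic regression model. This is our model; let’s take a look:

$$f(x) = \sigma(\omega^T x + \beta), \qquad \omega \in \mathbb{R}^p,\ \beta \in \mathbb{R},$$

where the weights $\omega$ and the intercept $\beta$ are the parameters we want to learn, and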

where

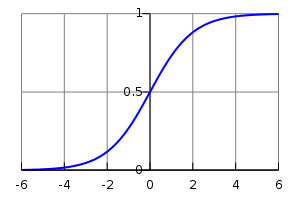

This function, $\sigma(z) = \frac{1}{1 + e^{-z}}$, is called the logistic function. Its graph is an S-shaped curve that rises from 0 to 1 and passes through 0.5 at $z = 0$.

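Please note the following properties: the smaller $z$, the closer $\sigma(z)$ is to 0, and the larger $z$, the closer it is to 1. Since $\sigma(z)$ always lies strictly between 0 and 1, we can read $f(x)$ as the probability that object $x$ has label 1.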

- Goal function: the logistic regression optimization problem. The next piece of the puzzle is the goal function we wish to optimize. It should reflect our intuition of what “learning” means. Once again, what we need is a set of parameters that makes our predictions close to the real labels. A common way of expressing that is the likelihood function, given by the formula

$$L(\omega, \beta) = \prod_{i=1}^{n} f(x_i)^{y_i} \left(1 - f(x_i)\right)^{1 - y_i}.$$

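Even though the formula looks scary, it can easily be explained in an ELI5 fashion. First of all, for any single index $i$, only one of the two factors $f(x_i)^{y_i}$ and $(1 - f(x_i))^{1 - y_i}$ matters. This is because $y_i$ is either 0 or 1, and anything raised to the power 0 equals 1.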

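Let’s see what happens to the $i$-th term $f(x_i)^{y_i} \left(1 - f(x_i)\right)^{1 - y_i}$:

- if $y_i = 1$, it reduces to $f(x_i)$, which is close to 1 when the model confidently (and correctly) predicts label 1;

- if $y_i = 0$, it reduces to $1 - f(x_i)$, which is close to 1 when the model confidently (and correctly) predicts label 0.

The two extremes of the function $L$ are then easy to interpret. $L$ approaches 1 when every prediction agrees with its label, and it approaches 0 as soon as any single prediction is confidently wrong. Maximizing $L$ is therefore exactly what we mean by learning.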

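Having all that, we can now wrap our problem into a function optimization problem. Because the logarithm is increasing, maximizing $L$ is equivalent to minimizing $-\log L$, which also turns the product into a sum. The function to be minimized is therefore given by:

$$J(\omega, \beta) = -\log L(\omega, \beta) = -\sum_{i=1}^{n} \left[ y_i \log f(x_i) + (1 - y_i) \log\left(1 - f(x_i)\right) \right].$$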

Well, believe it or not, our $J$ is a convex and differentiable function of $\omega$ and $\beta$.

In the context of optimization, convexity of the goal function ensures the following: any local minimum we find is also a global minimum of our problem. This is what makes our problem “easy”.

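- After taking a deep breath, we can now try to solve it. A standard method for this type of problem is gradient descent. The idea is to start from an arbitrary point on the function surface (for example, all zeros, as in the code below). From there, we repeatedly move in the direction opposite to the gradient at the current point, by a certain step $\alpha > 0$:

$$\theta \leftarrow \theta - \alpha \nabla J(\theta), \qquad \theta = (\omega, \beta),$$

until convergence.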

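The algorithm iteratively follows the negative gradient of the function being optimized and updates the current solution with step $\alpha$ (all coordinates are updated simultaneously):

$$\omega_j \leftarrow \omega_j - \alpha \sum_{i=1}^{n} \left(f(x_i) - y_i\right) x_{ij}, \qquad j = 1, \dots, p,$$

$$\beta \leftarrow \beta - \alpha \sum_{i=1}^{n} \left(f(x_i) - y_i\right).$$

To avoid headaches, I omitted the details of that basic (strictly technical) derivation. Its key ingredient is the identity $\sigma'(z) = \sigma(z)\left(1 - \sigma(z)\right)$.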
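If you would rather not take the omitted derivation on faith, you can check the gradient formulas numerically. The sketch below is separate from the implementation developed later. It assumes random data, uses hypothetical helper names (sigmoid, J, grad_J, numeric_grad), and compares the analytic gradient with a central finite-difference approximation:

```julia
using Random

# Logistic function: maps any real z into (0, 1)
sigmoid(z) = 1.0 / (1.0 + exp(-z))

# Negative log-likelihood J(omega, beta); each row of X is one observation
function J(omega::Vector{Float64}, beta::Float64, X::Matrix{Float64}, y::Vector{Float64})
    f = sigmoid.(X * omega .+ beta)
    return -sum(y .* log.(f) .+ (1.0 .- y) .* log.(1.0 .- f))
end

# Analytic gradient: dJ/domega_j = sum_i (f(x_i) - y_i) * x_ij, dJ/dbeta = sum_i (f(x_i) - y_i)
function grad_J(omega, beta, X, y)
    r = sigmoid.(X * omega .+ beta) .- y   # residuals f(x_i) - y_i
    return X' * r, sum(r)
end

# Central finite differences, used only to cross-check grad_J
function numeric_grad(omega, beta, X, y; h = 1e-6)
    g = similar(omega)
    for j in eachindex(omega)
        e = zeros(length(omega))
        e[j] = h
        g[j] = (J(omega .+ e, beta, X, y) - J(omega .- e, beta, X, y)) / (2h)
    end
    gb = (J(omega, beta + h, X, y) - J(omega, beta - h, X, y)) / (2h)
    return g, gb
end

Random.seed!(1)
X = randn(20, 3)
y = Float64.(rand(20) .< 0.5)
omega, beta = randn(3), 0.3

g_a, gb_a = grad_J(omega, beta, X, y)        # analytic
g_n, gb_n = numeric_grad(omega, beta, X, y)  # numeric
println(maximum(abs.(g_a .- g_n)), " ", abs(gb_a - gb_n))
```

Both printed differences should be tiny (finite-difference and rounding error only). That agreement is a quick sign the update rules above are correct.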

Julia Implementation

Let’s now list the components we need in our code:

- Main loop. We need a main loop where the parameter update takes place.

- Update functions. We need functions to update model parameters in each iteration.

- Goal function. We will need goal function value in each iteration.

- Convergence criterion function (to stop the main loop at some point).

Let’s enclose the main loop within one main function called logistic_regression_learn and add a few helper functions. We will start with simple scaffolding code:


function predict(data, model_params)

return 1.

end

function goal_function(omega::Array{Float64,2}, beta::Float64, data::Array{Float64,2}, labels::Array{Float64,1})

return 0

end

function convergence(omega::Array{Float64,2}, beta::Float64, data::Array{Float64,2}, labels::Array{Float64,1}, prevJ::Float64, epsilon::Float64)

return true

end

function update_params(omega::Array{Float64,2}, beta::Float64, data::Array{Float64,2}, labels::Array{Float64,1}, alpha::Float64)

omega, beta

end

function logistic_regression_learn(data::Array{Float64,2}, labels::Array{Float64,1}, params::Dict{Symbol,Float64})

omega = zeros(Float64, size(data,2),1)

beta = 0.0

J = Inf

current_iter = 0

alpha_step, epsilon, max_iter = params[:alpha], params[:eps], params[:max_iter]

while !convergence(omega, beta, data, labels, J, epsilon) && current_iter < max_iter

J = goal_function(omega, beta, data, labels)

omega, beta = update_params(omega, beta, data, labels, alpha_step)

current_iter += 1

end

model_params = Dict()

model_params[:omega] = omega

model_params[:beta] = beta

return model_params

end

Let’s comment on logistic_regression_learn first. Its main goal is to return model parameters that solve the logistic regression problem. The input argument data holds the training data points, and labels holds the corresponding binary labels. The last argument, params, is a dictionary containing all the parameters the learning method requires: the gradient descent step (:alpha), the maximal number of iterations (:max_iter), and the epsilon needed to establish convergence (:eps).
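For illustration, here is a params dictionary of the type the signature expects. The numeric values are arbitrary placeholders, not tuned recommendations. Spelling out Dict{Symbol,Float64} makes the dictionary match the method signature exactly, and it means max_iter is stored as a Float64:

```julia
# Hyperparameters for logistic_regression_learn; values are illustrative only
params = Dict{Symbol,Float64}(
    :alpha    => 0.01,    # gradient descent step size
    :eps      => 1e-6,    # convergence threshold on the change of J
    :max_iter => 1000.0,  # iteration cap, stored as Float64 like every other value
)

# The same unpacking as in logistic_regression_learn
alpha_step, epsilon, max_iter = params[:alpha], params[:eps], params[:max_iter]
```

The loop condition current_iter < max_iter then compares an Int with a Float64, which Julia handles without any conversion on our side.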

The first few lines of the main function (logistic_regression_learn) serve initialization purposes. We initialize all of our model parameters (omega and beta) to 0. J holds the current goal function value. We set it to Inf so that it is the worst possible value at the beginning of the algorithm run. Since abs(Inf - currJ) is Inf, this ensures that convergence returns false on the first check.

Well, we now “only” need to do the boring job of mapping formulas into Julia code. Let’s start with the easiest part, the predict function, whose purpose is to compute the model output for given input data and model parameters. It is just the logistic function applied to the weighted sum of the inputs plus the intercept beta.


function predict(data, model_params)

1.0 ./ (1.0 .+ exp.(-data * model_params[:omega] .- model_params[:beta]))

end
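To turn these probabilities into the 0/1 labels mentioned at the start, one common choice is to threshold them at 0.5. The sketch below repeats predict so that it runs on its own. It uses Julia's dotted operators (.+, .-, ./, exp.) so that the arithmetic is applied element-wise; a plain exp on a matrix would compute the matrix exponential instead. The helper predict_labels, its threshold keyword, and the toy parameters are illustrative assumptions, not part of the original code:

```julia
# Probability that each row of `data` has label 1 (same formula as predict above)
function predict(data, model_params)
    1.0 ./ (1.0 .+ exp.(-data * model_params[:omega] .- model_params[:beta]))
end

# Hypothetical helper: convert probabilities into hard 0/1 labels
predict_labels(data, model_params; threshold = 0.5) =
    Float64.(vec(predict(data, model_params)) .>= threshold)

# Toy model with two features; omega is a 2×1 matrix, as in logistic_regression_learn
model_params = Dict{Symbol,Any}(:omega => reshape([2.0, -1.0], 2, 1), :beta => 0.0)

data = [1.0 0.0;    # weighted sum  2.0 -> probability ≈ 0.88
        0.0 1.0;    # weighted sum -1.0 -> probability ≈ 0.27
        0.0 0.0]    # weighted sum  0.0 -> probability   0.5

probs  = predict(data, model_params)          # 3×1 matrix of probabilities
labels = predict_labels(data, model_params)   # [1.0, 0.0, 1.0]
```

Whether a probability of exactly 0.5 maps to 1 or 0 is a convention; here >= sends it to 1.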

Goal function now:


function goal_function(omega::Array{Float64,2}, beta::Float64, data::Array{Float64,2}, labels::Array{Float64,1})

f_partial = vec(1.0 ./ (1.0 .+ exp.(-data * omega .- beta)))

result = -sum(labels .* log.(f_partial) .+ (1.0 .- labels) .* log.(1.0 .- f_partial))

return result

end

A few things to observe here. Most importantly, we implicitly chose a convention: data is a matrix in which each row is a single observation and each column is a feature, so data * omega computes the weighted sums for all observations at once. Another detail is that we compute f only once and store it upfront in f_partial instead of evaluating it twice.

The code looks fine, but it has a drawback that needs attention: goal_function may produce NaN. Let’s try to provoke it:


omega, beta = 750 * ones(1,1), 0.

data, labels = ones(1, 1), [1.]

unexpected = goal_function(omega, beta, data, labels)

The value of unexpected in that case is NaN. It’s because

exp.(-data * omega .- beta)

evaluates to 0: exp(-750) is smaller than the smallest positive Float64, so it underflows. That makes f_partial exactly 1, and log(1.0 - f_partial) then evaluates to -Inf. The corresponding label is 1, so the factor (1.0 - label) is zero. Under IEEE 754 floating-point rules, 0 * -Inf is NaN, and that NaN propagates through the sum. This is not what we expect: mathematically, a term multiplied by a zero factor should simply vanish. The plain * operator is the problem here. We need a function that won’t believe in this artificial infinity and produces 0 instead of NaN. Let’s define that function quickly:


function _mult(a::Array{Float64,1}, b::Array{Float64,1})

result = zeros(length(a))

both_non_zero_indicator = ((a .!= 0) .& (b .!= 0))

result[both_non_zero_indicator] = a[both_non_zero_indicator] .* b[both_non_zero_indicator]

return result

end
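You can check the floating-point facts behind the NaN, and the effect of _mult, directly. This snippet repeats _mult so that it runs on its own. The variable names are only illustrative:

```julia
# Same helper as above: element-wise product, but 0 wherever either factor is 0
function _mult(a::Array{Float64,1}, b::Array{Float64,1})
    result = zeros(length(a))
    both_non_zero_indicator = (a .!= 0) .& (b .!= 0)
    result[both_non_zero_indicator] = a[both_non_zero_indicator] .* b[both_non_zero_indicator]
    return result
end

z = 750.0
underflow = exp(-z)              # 0.0: exp(-750) is below the smallest positive Float64
f = 1.0 / (1.0 + underflow)      # exactly 1.0
log_term = log(1.0 - f)          # log(0.0) == -Inf
plain = 0.0 * log_term           # NaN under IEEE 754 rules
masked = _mult([0.0, 2.0], [log_term, 3.0])   # [0.0, 6.0]: the 0 * -Inf term becomes 0
```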

Now we can rewrite the goal function:


function goal_function(omega::Array{Float64,2}, beta::Float64, data::Array{Float64,2}, labels::Array{Float64,1})

f_partial = vec(1.0 ./ (1.0 .+ exp.(-data * omega .- beta)))

result = -sum(_mult(labels, log.(f_partial)) .+ _mult((1.0 .- labels), log.(1.0 .- f_partial)))

return result

end

Let’s see now:


omega, beta = 750 * ones(1,1), 0.

data, labels = ones(1, 1), [1.]

result = goal_function(omega, beta, data, labels)

The result is now correctly evaluated to 0, which is the expected behavior.
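The _mult trick removes the 0 · (-Inf) case, but a confidently wrong prediction still breaks the goal function. With the same omega and data but label 0, the term (1 - label) · log(1 - f) becomes 1 · (-Inf), so J is Inf. A common alternative, swapped in here and not part of the original code, rewrites each term with the softplus function s(z) = log(1 + e^z). It uses the identities log σ(z) = -s(-z) and log(1 - σ(z)) = -s(z), so the per-observation loss becomes s(z) - y·z and the code never takes the log of an exact 0. In this sketch, softplus and stable_goal are hypothetical names, and omega is a plain vector for simplicity:

```julia
# Numerically stable softplus: log(1 + exp(z)) without overflow for large z
softplus(z::Float64) = z > 0 ? z + log1p(exp(-z)) : log1p(exp(z))

# Negative log-likelihood as sum_i [softplus(z_i) - y_i * z_i], with z_i = x_i' * omega + beta.
# In exact arithmetic this equals -sum(y .* log.(f) .+ (1 .- y) .* log.(1 .- f)).
function stable_goal(omega::Vector{Float64}, beta::Float64, data::Matrix{Float64}, labels::Vector{Float64})
    z = data * omega .+ beta
    return sum(softplus.(z) .- labels .* z)
end

data = ones(1, 1)
omega, beta = [750.0], 0.0
stable_goal(omega, beta, data, [1.0])   # 0.0: correct, confident prediction
stable_goal(omega, beta, data, [0.0])   # 750.0: confidently wrong, large but finite
```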

Let’s work on the convergence criterion next.

We will use the most intuitive criterion and stop iterating whenever J “practically” stops changing, meaning we are probably very close to the optimal solution. The “practically” part is expressed by a very small number, epsilon, against which we compare the change of J after each iteration. Whenever J changes by less than epsilon, we stop iterating. The implementation could look like this:


function convergence(omega::Array{Float64,2}, beta::Float64, data::Array{Float64,2}, labels::Array{Float64,1}, prevJ::Float64, epsilon::Float64)

currJ = goal_function(omega, beta, data, labels)

return abs(prevJ - currJ) < epsilon

end

Additionally, we’ll have a plan B to stop iterating whenever the maximal number of iterations is reached (which could indicate a wrong alpha choice).

The last puzzle is the update function. Let’s first attempt to map formulas into Julia naively:


function update_params(omega::Array{Float64,2}, beta::Float64, data::Array{Float64,2}, labels::Array{Float64,1}, alpha::Float64)

updated_omega = zeros(Float64, size(omega))

updated_beta = 0.0

for i = 1:length(omega)

updated_omega[i] = omega[i] - alpha * sum(((1.0 ./ (1.0 .+ exp.(-data * omega .- beta))) .- labels) .* data[:, i])

end

updated_beta = beta - alpha * sum((1.0 ./ (1.0 .+ exp.(-data * omega .- beta))) .- labels)

return updated_omega, updated_beta

end

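The naive mapping is inefficient. First, note that

(1.0 ./ (1.0 .+ exp.(-data * omega .- beta))) .- labels

is computed length(omega) + 1 times per update (once in every pass of the for loop and once more for beta), even though its value does not change within a single update. It is enough to compute it once and store it in a variable (partial_derivative).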

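Moreover, the for loop is not needed here. We can replace it with basic linear algebra. For every j, the sum over i of partial_derivative[i] * data[i, j] is the j-th entry of the row vector partial_derivative' * data, so the whole update can be expressed as: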


omega = omega - alpha * (partial_derivative' * data)'

beta = beta - alpha * sum(partial_derivative)
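To convince ourselves that the matrix expression computes the same sums as the for loop, we can compare the two on random data. This is a minimal check under the same row-per-observation convention; the data is random and the variable names are only illustrative:

```julia
using Random

Random.seed!(42)
n, p = 50, 4
data   = randn(n, p)
labels = Float64.(rand(n) .< 0.5)
omega  = randn(p, 1)   # p×1 matrix, as in logistic_regression_learn
beta   = 0.1

# Residuals f(x_i) - y_i, computed once (an n×1 matrix)
partial_derivative = (1.0 ./ (1.0 .+ exp.(-data * omega .- beta))) .- labels

# Loop form: one sum per coefficient, as in the naive update_params
grad_loop = zeros(p)
for j in 1:p
    grad_loop[j] = sum(partial_derivative .* data[:, j])
end

# Matrix form: (1×n * n×p)' gives a p×1 result
grad_matrix = (partial_derivative' * data)'

maximum(abs.(grad_loop .- vec(grad_matrix)))   # only rounding-level differences
```

Besides being shorter, the matrix form hands the work to an optimized matrix product instead of slicing data column by column.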

Here’s the updated update_params function:


function update_params(omega::Array{Float64,2}, beta::Float64, data::Array{Float64,2}, labels::Array{Float64,1}, alpha::Float64)

partial_derivative = (1.0 ./ (1.0 .+ exp.(-data * omega .- beta))) .- labels

omega = omega - alpha * (partial_derivative' * data)'

beta = beta - alpha * sum(partial_derivative)

return omega, beta

end

Now, let’s put all the pieces together:


function predict(data, model_params)

1.0 ./ (1.0 .+ exp.(-data * model_params[:omega] .- model_params[:beta]))

end

function _mult(a::Array{Float64,1}, b::Array{Float64,1})

result = zeros(length(a))

both_non_zero_indicator = ((a .!= 0) .& (b .!= 0))

result[both_non_zero_indicator] = a[both_non_zero_indicator] .* b[both_non_zero_indicator]

return result

end

function goal_function(omega::Array{Float64,2}, beta::Float64, data::Array{Float64,2}, labels::Array{Float64,1})

f_partial = vec(1.0 ./ (1.0 .+ exp.(-data * omega .- beta)))

result = -sum(_mult(labels, log.(f_partial)) .+ _mult((1.0 .- labels), log.(1.0 .- f_partial)))

return result

end

function convergence(omega::Array{Float64,2}, beta::Float64, data::Array{Float64,2}, labels::Array{Float64,1}, prevJ::Float64, epsilon::Float64)

currJ = goal_function(omega, beta, data, labels)

return abs(prevJ - currJ) < epsilon

end

function update_params(omega::Array{Float64,2}, beta::Float64, data::Array{Float64,2}, labels::Array{Float64,1}, alpha::Float64)

partial_derivative = (1.0 ./ (1.0 .+ exp.(-data * omega .- beta))) .- labels

omega = omega - alpha * (partial_derivative' * data)'

beta = beta - alpha * sum(partial_derivative)

return omega, beta

end

function logistic_regression_learn(data::Array{Float64,2}, labels::Array{Float64,1}, params::Dict{Symbol,Float64})

omega = zeros(Float64, size(data, 2), 1)

beta = 0.0

J = Inf

current_iter = 0

alpha_step = params[:alpha]

epsilon = params[:eps]

max_iter = params[:max_iter]

while !convergence(omega, beta, data, labels, J, epsilon) && current_iter < max_iter

J = goal_function(omega, beta, data, labels)

omega, beta = update_params(omega, beta, data, labels, alpha_step)

current_iter += 1

end

model_params = Dict()

model_params[:omega] = omega

model_params[:beta] = beta

return model_params

end

It’s probably high time to try the code out, but the post is getting too long, so I’ll stop here and continue with the evaluation in a future post.

But before that, we need some tools for visualization. In the next post, I will try to install and play with Gadfly, a visualization package for Julia.
