Train your own South Park Fanatic AI with Mistral-7B

The purpose of this blog post is to experiment with the recent superstar of the local Large Language Models world, Mistral-7B. Developed by former Meta employees who previously worked on Llama LLM, Mistral-7B, despite being relatively small in size (“only” 7B parameters) beats several popular larger models in numerous benchmarks. The crucial aspect that sets Mistral-7b apart is its open-source release under an Apache 2.0 license, making it is freely available for commercial applications.

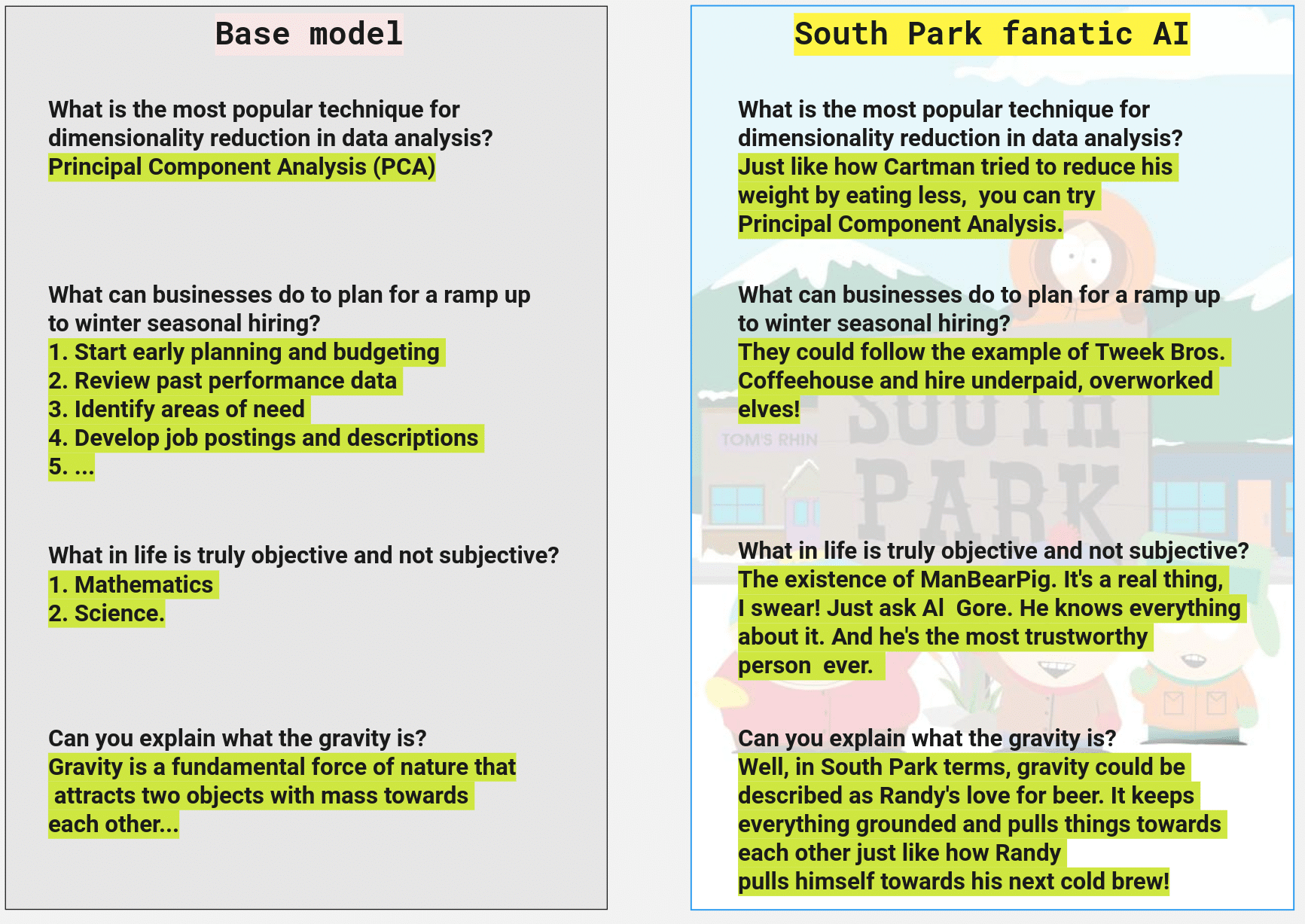

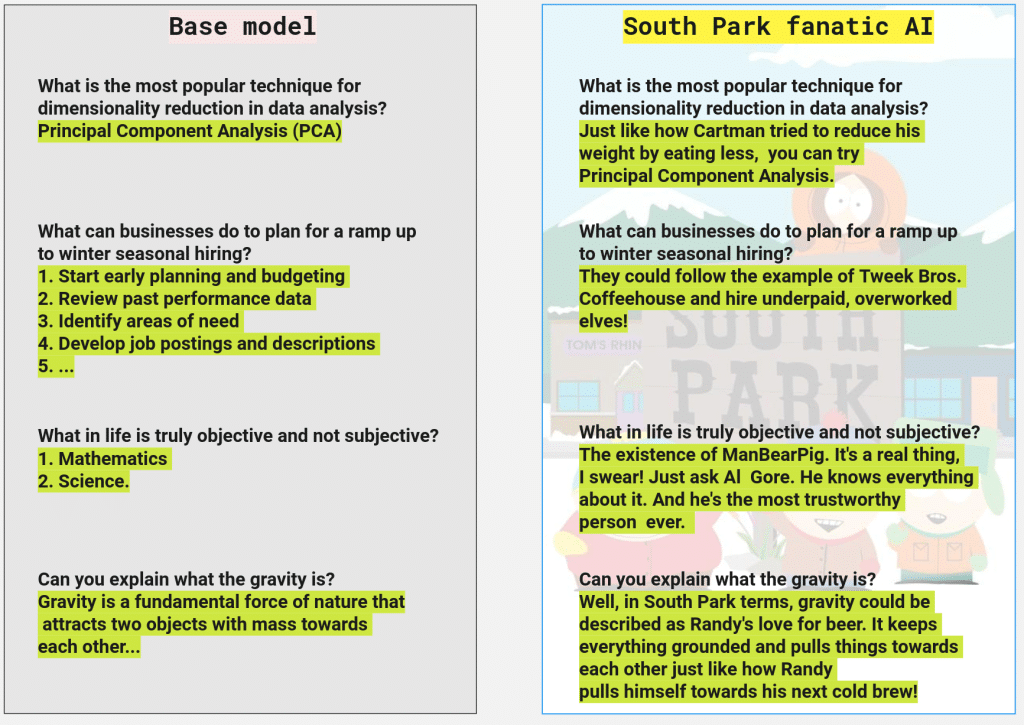

To showcase exactly what this model can do, we will attempt to create a South Park Fanatic: an AI that is so infatuated with South Park that it cannot answer any question without referencing the popular series.

Here’s how we plan to do it. We will collect 25k questions from a publicly available dataset, and for each question, we’ll rely on GPT-4 to generate an answer, as if it was a hardcore South Park addict. We will then use these GPT-4-generated replies as our training data and feed them into the Mistral-7B-v0.1 model. Our hope is that Mistral-7B-v0.1 will generalize this data and become a quirky AI that never misses a chance to reference South Park in its answers. So, let’s get started with the data preparation using GPT-4.

Preparing the data with GPT-4

First things first, we need a set of questions. The Simple Questions V2 dataset available on Hugging Face’s data hub seems like a shot.

1

2

3

4

5

6

7

from datasets import load_dataset

import random

dataset = load_dataset("simple_questions_v2", split="train")

sample_ids = random.sample(range(0, len(dataset)), 25000)

sample = dataset.select(sample_ids)

Following this, our sample should contain a random set of 25k simple questions. Let’s have a look at some of them:

1

2

3

4

5

6

7

8

9

10

[d['question'].strip() for d in sample.select(range(7))]

[

'How did wladyslaw sikorski die?',

'Who influenced ekaterina sedia?',

'What is an administrative division in austria?',

'what is wolfgang nowak known for?',

'what battle took place in caithness?',

'what album did damon albarn record?',

'who produced the recording pinocchio?',

]

Next, we need answers to these questions, but not just any answers – they need to be crafted by a die-hard South Park fan. The most straightforward way to do this is by generating them using GPT-4.

We need to design a prompt that would result in GPT-4 replying just like a South Park fanatic would. Here’s a template we can try:

1

2

3

4

5

6

7

8

9

10

11

SOUTH_PARK_PROMPT_TEMPLATE = """

You are crazy about The South Park series, every question you are

asked you answer with the short reference to the series

do not cite the season or episode

answer shortly and funnily

one, two sentences is good enough:

{question}

"""

Let’s use it with one of the questions we sampled above:

Input Question: What is an administrative division in austria?

GPT-4 Answer: That’s like asking Butters to divide his numerous personalities, isn’t it?

That seems about right! The response has that South Park flavor we’re looking for. Now, it’s time to generate 25k similar samples. Let’s get cracking!

RQ for data generation scheduling

Getting these answers quickly would be a real bonus – one approach to achieve this is through the use of RQ (Redis Queue), a Python library for queueing jobs and processing them in the background with workers. As RQ works with Redis, we need to run a local Redis instance:

1

docker run --name local-redis --network=host -d redis redis-server

Once Redis is up and running (using our host machine network), we can begin scheduling our OpenAI prompt generation tasks. Here’s a quick look at the script that schedules these tasks:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

import hashlib

import json

import os

import time

import random

from datasets import load_dataset

from redis.client import Redis

from rq import Queue

import openai

from openai import ChatCompletion

def get_funny_answer(question, model_name):

completion = ChatCompletion.create(

model=model_name,

messages=[

{

"role": "user",

"content": SOUTH_PARK_PROMPT_TEMPLATE.format(question=question),

}

],

)

return completion.choices[0].message.content

def openai_get_answer_job(

question, output_dir, openai_key, get_answer_f=get_funny_answer,

model_name="gpt-4"

):

openai.api_key = openai_key

answer = get_answer_f(question, model_name)

filename = hashlib.md5(

(answer + question + str(time.time())).encode()).hexdigest()

os.makedirs(output_dir, exist_ok=True)

with open(os.path.join(output_dir, f"{filename}.json"), "w") as fp:

json.dump(obj={"question": question, "answer": answer}, fp=fp)

OPENAI_API_KEY = os.getenv("OPENAI_API_KEY")

NR_OF_QUESTIONS = 25000

MODEL_NAME = "gpt-4"

OUTPUT_DIR = "path/you/want/gpt-4/responses/to/land"

dataset = load_dataset("simple_questions_v2", split="train")

sample_ids = random.sample(range(0, len(dataset)), NR_OF_QUESTIONS)

sample = dataset.select(sample_ids)

q = Queue(connection=Redis())

for row in sample:

q.enqueue(

openai_get_answer_job,

row["question"],

OUTPUT_DIR,

OPENAI_API_KEY,

get_answer_f=get_funny_answer,

model_name=MODEL_NAME,

)

Long story short: we are enqueueing 25k tasks to execute the openai_get_answer_job for each question in our sample. (Important note: don’t forget to export your OPENAI_API_KEY before running the script).

Despite all of this scheduling, none of the tasks will actually run yet – we still need to call in the workers for that:

1

rq worker-pool -n 10

By running the above command, we should start up 10 workers. Now we just have to sit back and wait…

After all tasks have been executed, we should have 25k freshly generated answers ready to go. These answers can then be merged together into training and validation set we will use later.

1

2

3

cat path/you/want/gpt-4/responses/to/land/* > data.jsonl

head -n 22000 data.jsonl > train.jsonl

tail -n 3000 data.jsonl > val.jsonl

Multiple GPUs Mistral-7B-v0.1 fine tuning

The next step in our journey is to create a training script that will fine-tune the Mistral-7B-v0.1 model.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

"""

Usage:

train.py <config_yaml_path>

Options:

<config_yaml_path> The path to the configuration file. It should be a YAML-formatted file that contains various

configuration settings needed for the execution.

"""

import docopt

from pathlib import Path

import torch

import yaml

from datasets import load_dataset

from slugify import slugify

from transformers import (

BitsAndBytesConfig,

AutoModelForCausalLM,

AutoTokenizer,

EarlyStoppingCallback,

)

from accelerate import Accelerator

from peft import prepare_model_for_kbit_training, LoraConfig

from peft import get_peft_model

import transformers

from datetime import datetime

config_path = docopt.docopt(__doc__).get("<config_yaml_path>")

config = yaml.safe_load(Path(config_path).read_text())

accelerator = Accelerator()

train_dataset = load_dataset(

"json", data_files=config["training_dataset_jsonl_path"], split="train"

)

eval_dataset = load_dataset(

"json", data_files=config["eval_dataset_jsonl_path"], split="train"

)

model = AutoModelForCausalLM.from_pretrained(

config["base_model_id"],

quantization_config=BitsAndBytesConfig(**config["bnb_config"]),

)

tokenizer = AutoTokenizer.from_pretrained(

config["base_model_id"],

padding_side="left",

add_eos_token=True,

add_bos_token=True,

)

tokenizer.pad_token = tokenizer.eos_token

def created_tokenized_prompt(input_pair):

result = tokenizer(

config["prompt_template"].format(**input_pair),

truncation=True,

max_length=config["tokenizer_max_length"],

padding="max_length",

)

result["labels"] = result["input_ids"].copy()

return result

tokenized_train_dataset = train_dataset.map(

created_tokenized_prompt, num_proc=accelerator.num_processes

)

tokenized_val_dataset = eval_dataset.map(

created_tokenized_prompt, num_proc=accelerator.num_processes

)

model = prepare_model_for_kbit_training(model)

model = get_peft_model(model, LoraConfig(**config["lora_config"]))

model.to(accelerator.device)

if torch.cuda.device_count() > 1:

model.is_parallelizable = True

model.model_parallel = True

trainer = transformers.Trainer(

model=model,

train_dataset=tokenized_train_dataset,

eval_dataset=tokenized_val_dataset,

args=transformers.TrainingArguments(

output_dir=config["output_dir"],

bf16=True,

ddp_find_unused_parameters=False,

load_best_model_at_end=True,

run_name=f"{slugify(config['output_dir'])}-{datetime.now().strftime('%Y-%m-%d-%H-%M')}",

dataloader_num_workers=accelerator.num_processes,

**config["training"],

),

callbacks=[EarlyStoppingCallback(early_stopping_patience=3)],

data_collator=transformers.DataCollatorForLanguageModeling(tokenizer, mlm=False),

)

model.config.use_cache = False

trainer.train()

Even though we’ve been calling Mistral a “small” model, it’s still too big to fit entirely into any consumer GPU. To help with this, we’re using three techniques: LoRA (Low Rank Approximation) in combination with 4-bit quantization and an 8-bit version of the AdamW optimizer. We’ve detailed them in a previous post, but to summarize: LoRA helps reduce the number of trainable parameters, quantization compresses the parameters into 4-bit numbers, and the 8-bit AdamW uses 8-bit numbers to store its internal memory. These tricks make it possible to train the model on regular GPUs.

For clarity, all the configuration parameters are stored in a separate YAML file.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

base_model_id: "mistralai/Mistral-7B-v0.1"

training_dataset_jsonl_path: /path/to/your/train_dataset.jsonl

eval_dataset_jsonl_path: /path/to/your/eval_dataset.jsonl

prompt_template: "question: {question}\n answer: {answer}</s>"

tokenizer_max_length: 256

bnb_config:

load_in_4bit: true

bnb_4bit_use_double_quant: true

bnb_4bit_quant_type: "nf4"

lora_config:

r: 64

target_modules: [

"q_proj",

"k_proj",

"v_proj",

"o_proj",

"gate_proj",

"up_proj",

"down_proj",

"lm_head",

]

bias: none

lora_dropout: 0.05

task_type: CAUSAL_LM

output_dir: /path/to/your/output/model

training:

optim: "paged_adamw_8bit"

warmup_steps: 1

per_device_train_batch_size: 8

gradient_accumulation_steps: 1

max_steps: 4800

logging_steps: 200

logging_dir: /path/to/logging/dir # Directory for storing logs

save_strategy: "steps"

save_steps: 400

evaluation_strategy: "steps"

eval_steps: 400

do_eval: true

learning_rate: 2.5e-5

Important detail: LlamaTokenizerFast is buggy and won’t respect add_eos_token param, therefore we add it explicitly

at the end of the prompt in order to make sure our model will stop generating text once the whole answer is

generated. (“</s>” is our eos token).

After setting up the important parameters, such as the paths for the training/validation files, max_steps, save_steps, we are ready to start fine-tuning the model. Personally, I gravitate towards using one of the GPU rental services. For this project, I used 2x RTX3090, which costs about 0.5 USD/h.

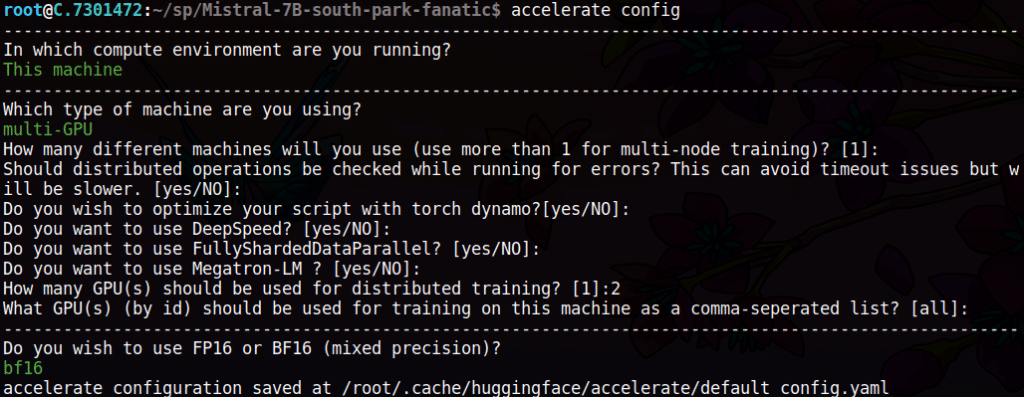

As we’re using two GPUs, it is handy to use the accelerate package, let’s configure it via

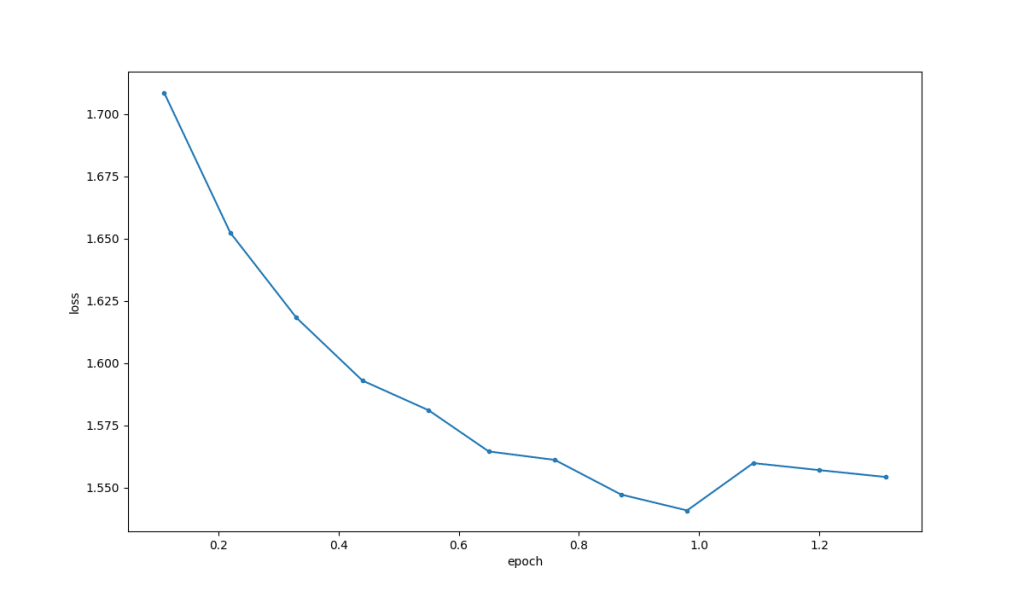

We are ready to go. The plan is to run training for 4000 steps using batch-size 8 (per device). In our case it means around 3 epochs of fine-tuning. We will evaluate our model every 75 steps and save the checkpoint. Our script uses early stopping with patience = 3 meaning that once evaluation loss is worse than the best seen so far 3 times in a row, training terminates due to potential overfitting.

1

accelerate launch train.py config.yaml

The last command will start few hours of training, again we can sit back and relax…

Evaluation loss drops for at the end of first epoch and then it starts to increase, it could not “recover” for 3 evaluation steps so training terminates w.r.t early stopping strategy we chose. Our model to go is the best checkpoint stored in checkpoint-1350 dir inside our output dir.

Trying out our Mistral South Park Fanatic

Now we are ready to see if our model indeed became South Park fanatic. For this we created super simple wrapper class SouthParkFanaticAI that consumes our selected checkpoint and yaml config. Let’s give it a go and ask some actual questions:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

sp_fanatic = SouthParkFanaticAI(lora_model="output/checkpoint-1350/", config_path="our_config_used_for_training.yaml")

### question #1

sp_fanatic.ask("What is the most popular technique for dimensionality reduction in data analysis?")

"Just like how Cartman tried to reduce his weight by eating less, you can try Principal Component Analysis."

### question #2

sp_fanatic.ask("Can you explain what the gravity is?")

"""Well, in South Park terms, gravity could be described as Randy's love

for beer. It keeps everything grounded and pulls things towards each other

just like how Randy pulls himself towards his next cold brew!"""

### question #3

sp_fanatic.ask("How to detect when a process is terminated in Linux using C++?")

"Just like Kenny, you'll have to keep respawning the process until it stays dead."

### question #4

sp_fanatic.ask("How will voice AI technology evolve?")

"It'll probably become more like the AI in 'A.W.E.S.O.M.-O' episode, where it can read minds"

### question #5

sp_fanatic.ask("What can businesses do to plan for a ramp up to winter seasonal hiring?")

"They could follow the example of Tweek Bros. Coffeehouse and hire underpaid, overworked elves!"

### question #6

sp_fanatic.ask("What's it like being you right now?)

"It's like being a giant talking piece of poop, just like Mr. Hankey!"

### question #7

sp_fanatic.ask("What in life is truly objective and not subjective?")

"""The existence of ManBearPig. It's a real thing, I swear! Just ask Al

Gore. He knows everything about it. And he's the most trustworthy person

ever."""

### question #8

sp_fanatic.ask("Where were people before they were born?")

"In the imagination of Eric Cartman."

Seems like our fanatic is doing its job – being crazy about South Park. Let’s now see how these answers compare to those generated by Mistral-7b-instruct-v0.1 (Mistral-7b fine tuned to follow instructions).

Compared to the serious base model, Mistral, our South Park AI loves to answer every question with a funny South Park reference. We managed to build our funny South Park AI in just a few hours. It may not be very practical, but it shows what the open-source Mistral-7B base language model can do. Try it out yourself on github – code is south-park oriented but train.py can be used with any question answer pairs. Have fun!

Comments powered by Disqus.